Alibaba Cloud unveils AI, cloud advancements

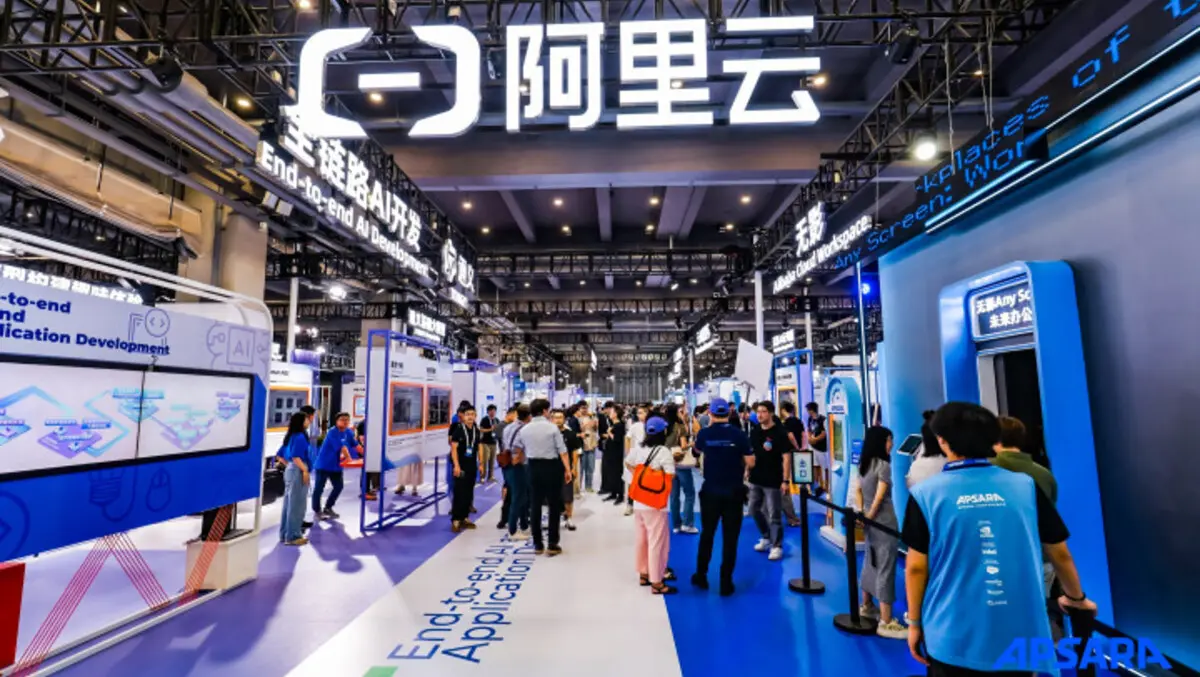

Alibaba Cloud's annual Apsara Conference 2024 is taking place in Hangzhou, China, from 19 to 21 September, featuring key announcements in AI and cloud infrastructure.

The conference introduced three significant advancements from the company aimed at enhancing the efficiency, sustainability, and inclusiveness of AI applications.

Key announcements include the release of the Qwen 2.5 model family, integration with NVIDIA and Banma for automotive applications, and a partnership with UNESCO-ICHEI to provide digital training to 12,000 educators and students globally by 2025.

Alibaba Cloud has released over 100 of its Qwen 2.5 large language models to the global open-source community. These models range in size from 0.5 billion to 72 billion parameters and offer enhanced capabilities in mathematics and coding, along with support for more than 29 languages. They cater to diverse sectors such as automotive, gaming, and scientific research.

"Alibaba Cloud is investing, with unprecedented intensity, in the research and development of AI technology and the building of its global infrastructure. We aim to establish an AI infrastructure of the future to serve our global customers and unlock their business potential," stated Eddie Wu, Chairman and Chief Executive Officer of Alibaba Cloud Intelligence.

The Qwen model series, which debuted in April 2023, has achieved over 40 million downloads across platforms like Hugging Face and ModelScope. The more than 100 open-source models in the Qwen 2.5 release include base models, instruct models, and quantised models across modalities such as language, audio, and vision, along with specialised code and mathematical models.

In addition to these models, Alibaba Cloud introduced a new text-to-video model as part of its Tongyi Wanxiang large model family. The model generates high-quality videos from text instructions in Chinese and English and can also transform static images into dynamic videos. It employs an advanced diffusion transformer (DiT) architecture to improve video reconstruction quality.

Alibaba Cloud also revealed its vision-language model, Qwen2-VL, capable of comprehending videos lasting over 20 minutes and supporting video-based question-answering. This model is designed for integration into mobile phones, automobiles, and robots, facilitating the automation of specific operations.

Furthermore, an AI Developer assistant powered by the Qwen model was launched to support programmers in automating tasks such as requirement analysis, coding, and debugging, allowing developers to focus on essential duties and skill enhancement.

The Apsara Conference also marked the announcement of an upgraded AI infrastructure covering data centre architecture, data management, model training, and inference:

Alibaba Cloud unveiled its next-generation data centre architecture, CUBE DC 5.0, featuring advanced technologies like a wind-liquid hybrid cooling system, all-direct current power distribution, and a smart management system. The new design aims to increase energy and operational efficiency while reducing deployment times by up to 50%.

An Open Lake Solution was introduced to manage vast amounts of data, integrating big data engines into a single platform to maximise data utility for generative AI applications. Through compute-storage separation and clear data governance, the platform is designed to use resources efficiently and deliver significant cost and time savings.

The PAI AI Scheduler, which integrates model training and inference, was launched to enhance computing resource management. This proprietary cloud-native scheduling engine utilises intelligent resource integration and automatic fault recovery, achieving an effective compute utilisation rate of over 90%.

To assist organisations in managing their data, Alibaba Cloud introduced DMS: OneMeta+OneOps, a platform for unified management of over 40 types of data sources across multiple cloud environments. This platform aims to boost the data utilisation rate tenfold.

Additionally, the company introduced the 9th Generation Enterprise Elastic Compute Service (ECS) instance, boasting a 30% increase in search recommendation speed and a 17% improvement in read and write queries per second (QPS) compared to the previous generation.

These updates are designed to enhance the efficiency, sustainability, and inclusiveness of AI applications for customers and partners globally.